- 1 Minute to read

- Print

- DarkLight

- PDF

Databricks Mapping Columns

- 1 Minute to read

- Print

- DarkLight

- PDF

Supported Data Type

| Rivery Datatype | Databricks Datatype | Mode | Is Splitted |

|---|---|---|---|

| STRING | STRING | Nullable | x |

| TEXT | STRING | Nullable | x |

| INTEGER | BIGINT | Nullable | x |

| SMALLINT | BIGINT | Nullable | x |

| BIGINT | BIGINT | Nullable | x |

| DATE | DATE | Nullable | x |

| TIME | TIME | Nullable | x |

| CHAR | STRING | Nullable | x |

| JSON | STRING | Nullable | x |

| RECORD | STRING | Nullable | x |

| VARIANT | STRING | Nullable | x |

| ARRAY | STRING | Repeated | x |

| DATETIME | TIMESTAMP | Nullable | x |

| TINYINT | INTEGER | Nullable | x |

| BOOLEAN | BOOLEAN | Nullable | x |

| BIT | BOOLEAN | Nullable | x |

| REAL | FLOAT | Nullable | x |

| FLOAT | FLOAT | Nullable | x |

| NUMBER | INTEGER | Nullable | x |

| DOUBLE | FLOAT | Nullable | x |

| OBJECT | STRING | Nullable | x |

| TIMESTAMP | TIMESTAMP | Nullable | x |

| STRING | STRING | Nullable | x |

| DECIMAL | FLOAT | Nullable | x |

Loading Unstructured Data into Databricks table

Databricks has a very powerful json extraction functions and also supports STRUCT/MAP data types. However, In order to prevent hitting limitations or rigid data types, and in order to provide a dynamic loading data from unstructured data sources (especially dynamic keys in the source - like in MongoDB), any unstructured column in mapping will be kept as “STRING” data type in the target table.

That means the table will contain some "structured" data types, like float, integer, or timestamp, alongside unstructured data type represented as STRING, such as ARRAYS and RECORDS (Objects).

In order to select JSON columns in the target table, Databricks provides a vast number of JSON functions.

Here is an example of handling JSON data in Databricks:

/*

Action table has action_calc json column with the next values:

{"name": "click", "amount": 4 }

*/

SELECT

get_json_object(`action_calc`,'$.name') AS `name`,

cast(get_json_object(`action_calc`,'$.amount') as INTEGER) AS `amount`,

get_json_object(`action_calc`,'$.nosuchcol') as `missing_col`

FROM actions;

/* Result:

| name | amount | missing_col |

| 'click' | 4 | null |

*/

Expressions

A column may also be a target of expression value. In that case, use the Expression definition on the right side of the mapping table. When an expression is used, the source column is ignored.

The expression can use other columns in the Target section at the mapping table, and any valid expression that can be used in Databricks clause.

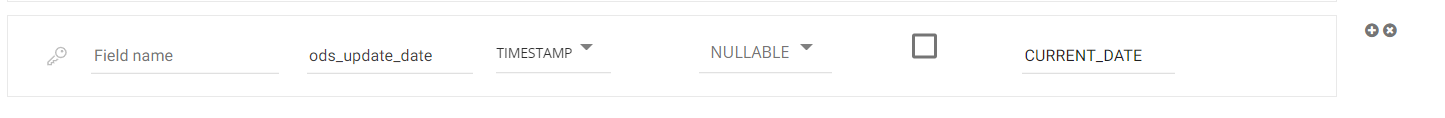

For example, the next expression calculates the table loading date by rivery, and will be targeted under ods_update_date columns:

By default, Databricks interprets empty fields in CSV files as null values. However, since it lacks a direct "null if" configuration, both NULL values and empty strings from the source are loaded as empty strings in Databricks.