- 6 Minutes to read

- Print

- DarkLight

- PDF

PostgreSQL Change Data Capture (CDC) Overview

- 6 Minutes to read

- Print

- DarkLight

- PDF

What is Change Data Capture Extraction?

Rivery's Change Data Capture (CDC) extraction mode provides a real-time stream of any changes made to the databases and tables configured, eliminating the need to implement and maintain incremental fields or retrieve data via select queries. It also allows you to retrieve deleted rows and schema changes from the database.

How Does Change Data Capture Extraction Work?

The Change Data Capture (CDC) mechanism is based on aWrite-Ahead Log (WAL) provided by the PostgreSQL database. The logical decoding feature of PostgreSQL is available in versions 10 and higher.

In PostgreSQL, the logical decoding mechanism creates a consistent and easy-to-understand format in one place. The logical decoding mechanism in PostgreSQL, on the other hand, is based on converting the data written to the WAL using an output plugin that converts the WAL transaction rows into a dedicated format. To be able to consume data from the database, this output plugin must be installed on the PostgreSQL server and the logical decoding configuration must be enabled. See the PostgreSQL configuration docs for more information on setting up a PostgreSQL database for Rivery CDC extraction.

Please Note:

Rivery offers CDC extraction mode for PostgreSQL on both Google Cloud SQL and Amazon RDS / Aurora.

Rivery uses the output plugin to continuously pull new rows in the WAL in order to pull data using the CDC architecture. In order to get historical data from the database, Rivery cannot rely on the entire log history already existing prior to setting up a river. This is because PostgreSQL normally keeps track of the WAL and purges it after a certain amount of time.

Rivery uses the Overwrite loading mode to create a full snapshot (or migration) of the chosen table(s) in order to align the data and metadata as it was on the first run. After the migration is complete, Rivery takes the existing WAL records and performs an Upsert-merge to the target table(s), while continuing to fetch new records from the log as they are created.

Rivery's PostgreSQL connector reads WAL records and generates change events in the FileZone files for row-level INSERT, UPDATE, and DELETE commands. Each file represents a set of database operations performed over a period of time. The data from the log is continuously streamed into the FileZone path determined in the river (with timeframes of no more than 15 minutes) and pushed into the target by the river's scheduled frequency. This method saves the data first in the FileZone, and then it can be pushed into the target DWH at any time.

FileZone is covered in further detail in the Target documentation.

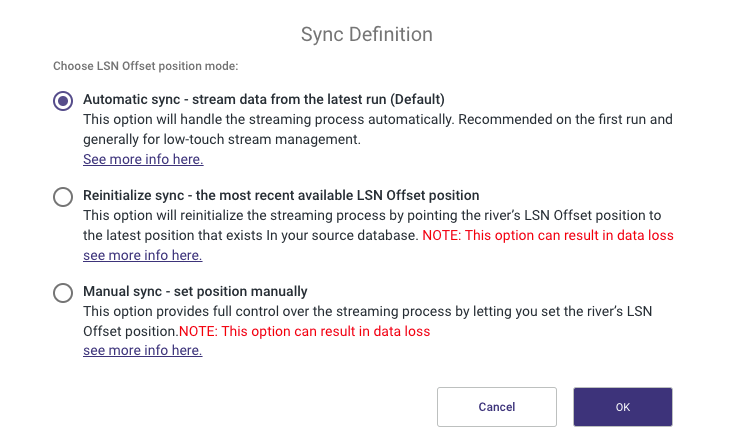

CDC Point in Time Position Feature

The CDC "Point in Time" Position feature allows users to gain deeper insights into the operational details of a River's streaming process. This functionality is essential for data recovery and synchronization, enabling users to locate and retrieve data from a specific point in history using the exact information stored in the CDC log position. For additional information, refer to our documentation.

A Sequence Change Data Capture Deployment

Discrepancies in transaction records can arise when two users simultaneously execute identical transactions, causing conflicts in the timestamp field.

Recognizing this challenge, Rivery has implemented a "sequence" Change Data Capture (CDC) mechanism to tackle this issue.

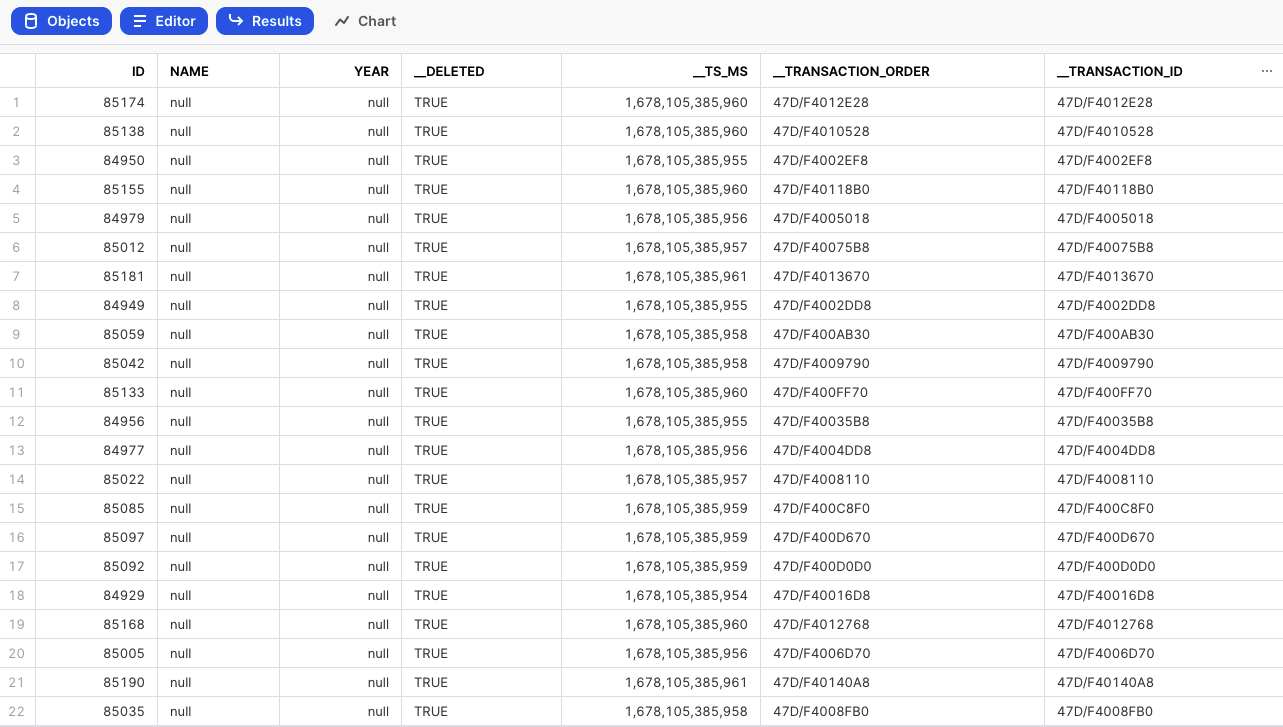

Rivery has enhanced each emitted record from the database by incorporating two extra metadata fields: '__transaction_id' and '__transaction_order'.

The '__transaction_id' field serves as a unique identifier for each transaction, ensuring that no two transactions share the same identifier. This uniqueness allows for precise identification and differentiation between transactions, mitigating conflicts arising from identical timestamps.

Furthermore, the '__transaction_order' field denotes the order in which the transactions were emitted from the database. By incorporating this field, the sequencing of transactions can be accurately maintained, enabling downstream systems such as Apache Kafka or AWS Kinesis to process and order transactions correctly.

The inclusion of these metadata fields guarantees that the ordering of transactions is preserved throughout the River. As a result, smooth and accurate transaction flows can be achieved, resolving the discrepancies that previously arose from transactions with identical timestamps.

The additional fields are depicted in this table:

For further information about Change Data Capture (CDC) Metadata Fields, please refer to our Database Overview document.

Partitioned Tables in PostgreSQL

Rivery supports PostgreSQL partitioned tables in both Standard Extraction and Change Data Capture (CDC).

For PostgreSQL versions 13 and later, partitioned tables can be replicated using logical replication. More details on logical replication limitations can be found in PostgreSQL's documentation.

Configuring Logical Replication for Partitioned Tables

To enable logical replication, you must create a publication for your tables. Publications allow selective replication, meaning you can add or remove tables dynamically. Only tables included in a publication will be replicated to Rivery. Each database can have multiple distinct publications.

Steps to Create a Publication

Ensure you have CREATE privileges or higher.

Create a publication for all tables using the following command:

Please Note:

The publication name 'rivery_pub' is provided as an example. The actual publication name must be unique for each database and cannot start with a number.

Partitioning Method Support

Rivery supports only declarative partitioning using 'CREATE TABLE ... PARTITION BY' command. This approach is required for proper change tracking in PostgreSQL version 13 and later.

Legacy inheritance-based partitioning is not supported.

Troubleshooting

Verifying Logical Replication

Before enabling CDC Streaming in Rivery, verify that logical replication is working correctly by running the following query:

If CDC streaming is already enabled on a River that reads from the same slot, the query will fail with the following error:

To resolve this issue:

- Disable CDC streaming on the relevant River.

- Run the query again to verify replication.

How to Enable Change Data Capture Extraction?

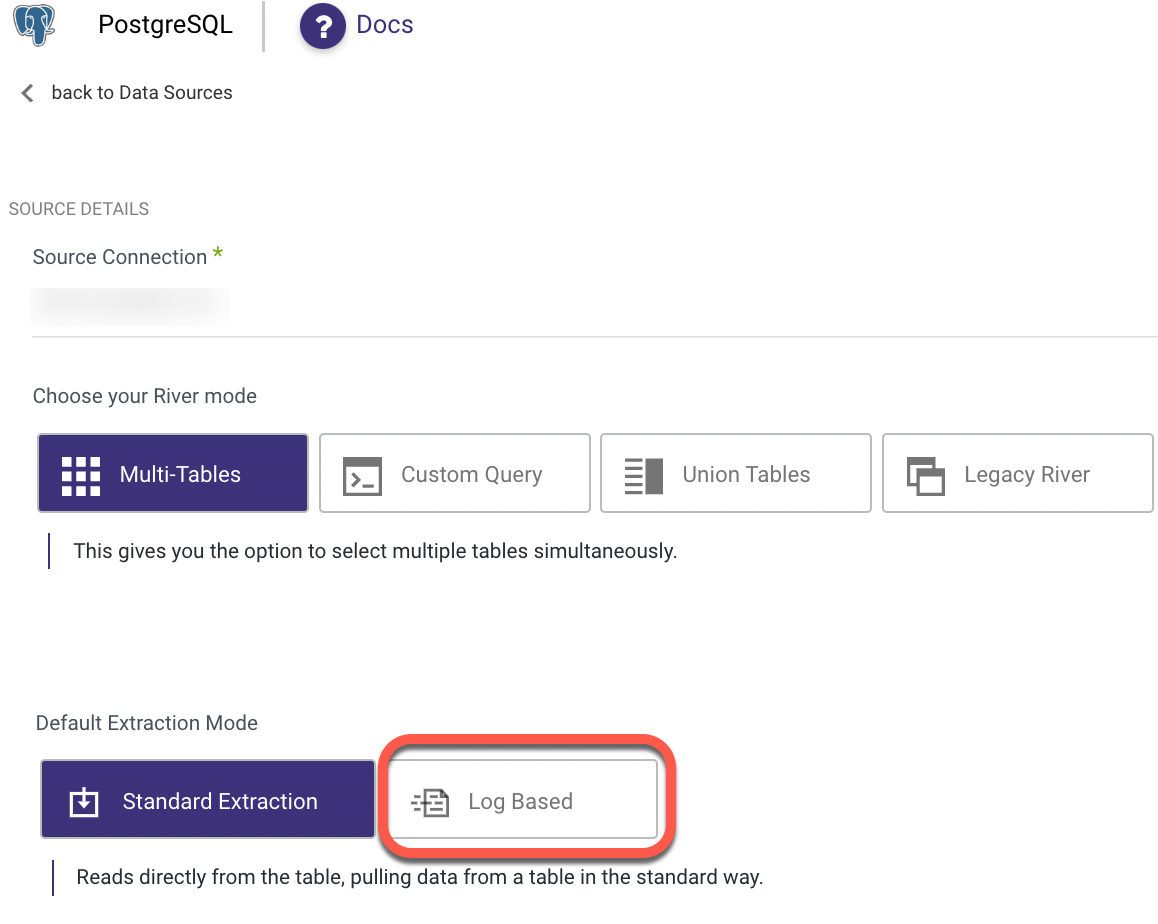

After you've established a connection, go to the Source tab and do the following:

- Choose the Multi-Tables as the River mode.

- Select the Log Based as the extraction mode.

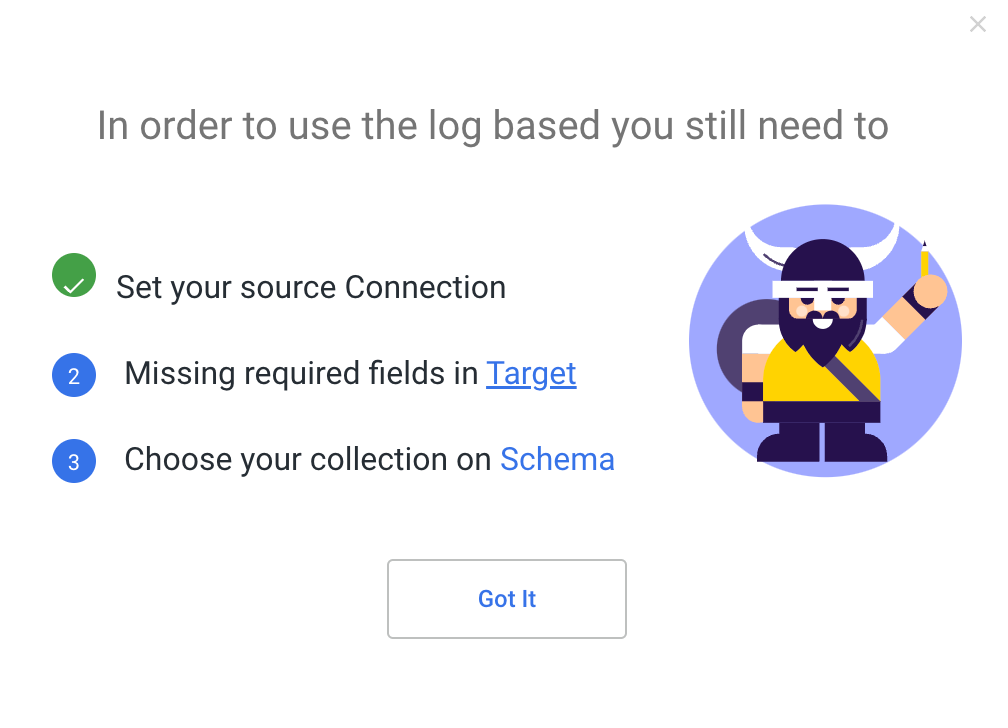

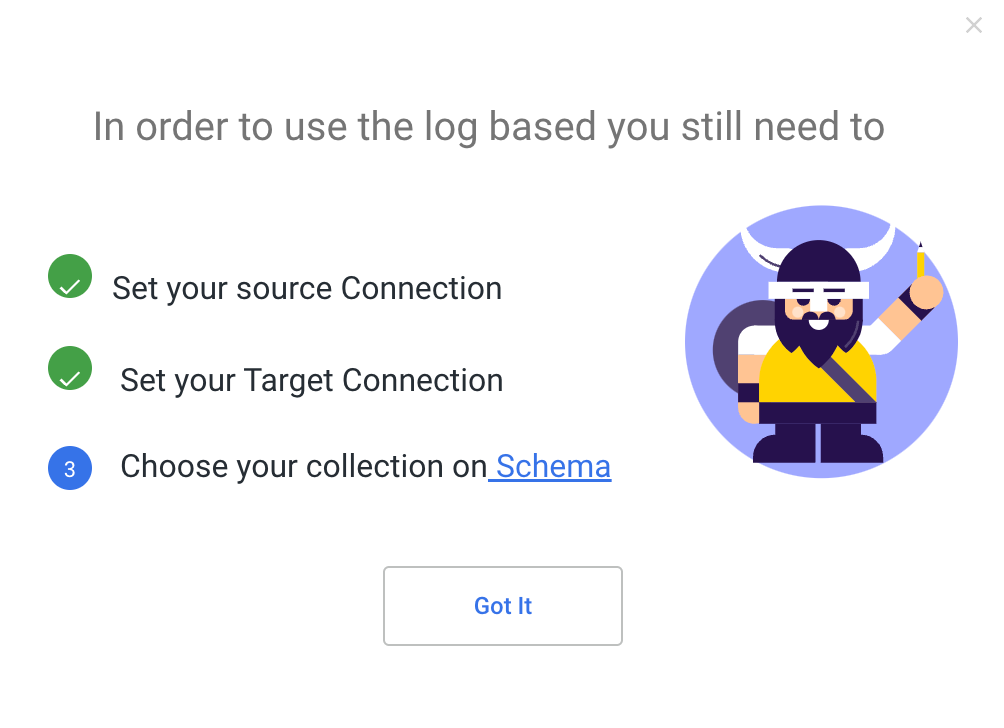

- A brief reminder appears, encouraging you to check your connection and set up your Source and Target, which will happen next. Select 'Got It' to proceed.

- Choose Log Based Version from the Advanced Options drop-down menu.

- Set the name of the custom replication slot (optional).

- Set a custom publication name (optional).

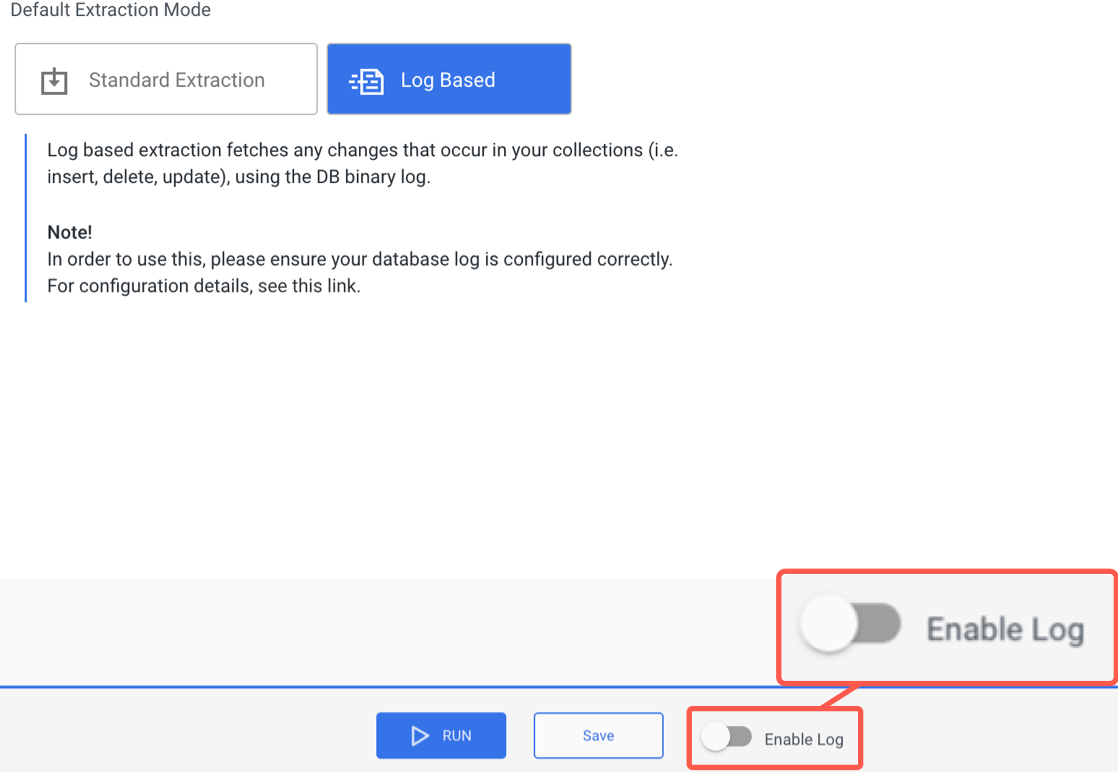

- Turn the 'Enable Log' toggle to true at the bottom of the page.

- A new window pops up, guiding you on the following steps. Select 'Target'.

- You'll be directly sent to the Target tab, where you can choose your data target.

- Define your Target Connection, then choose a Database and a Schema to load into.

- To make sure the operation is complete, click the Enable Log toggle once more.

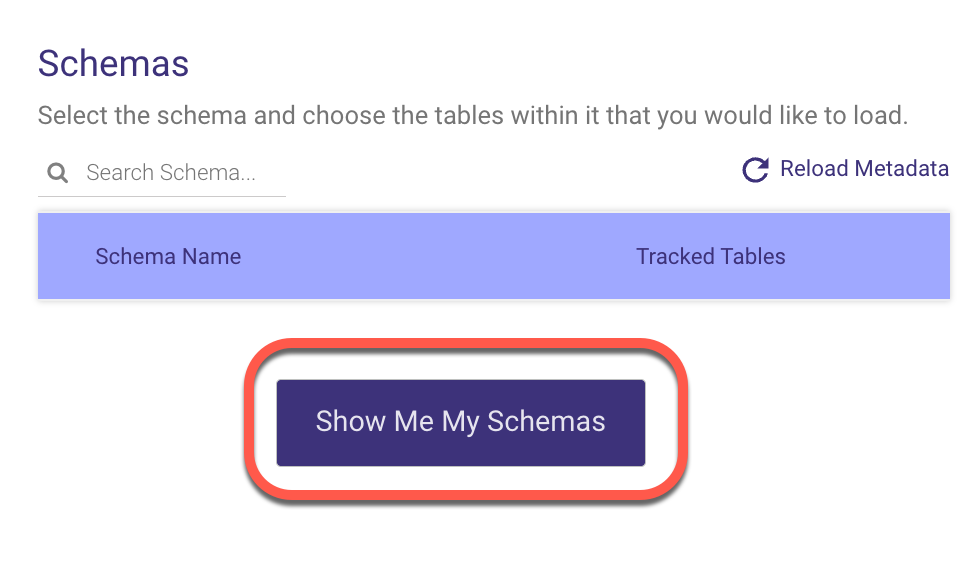

- To navigate to the Schema tab, click Schema.

- Click 'Show Me My Schemas'.

- A minimum of one Schema must be chosen.

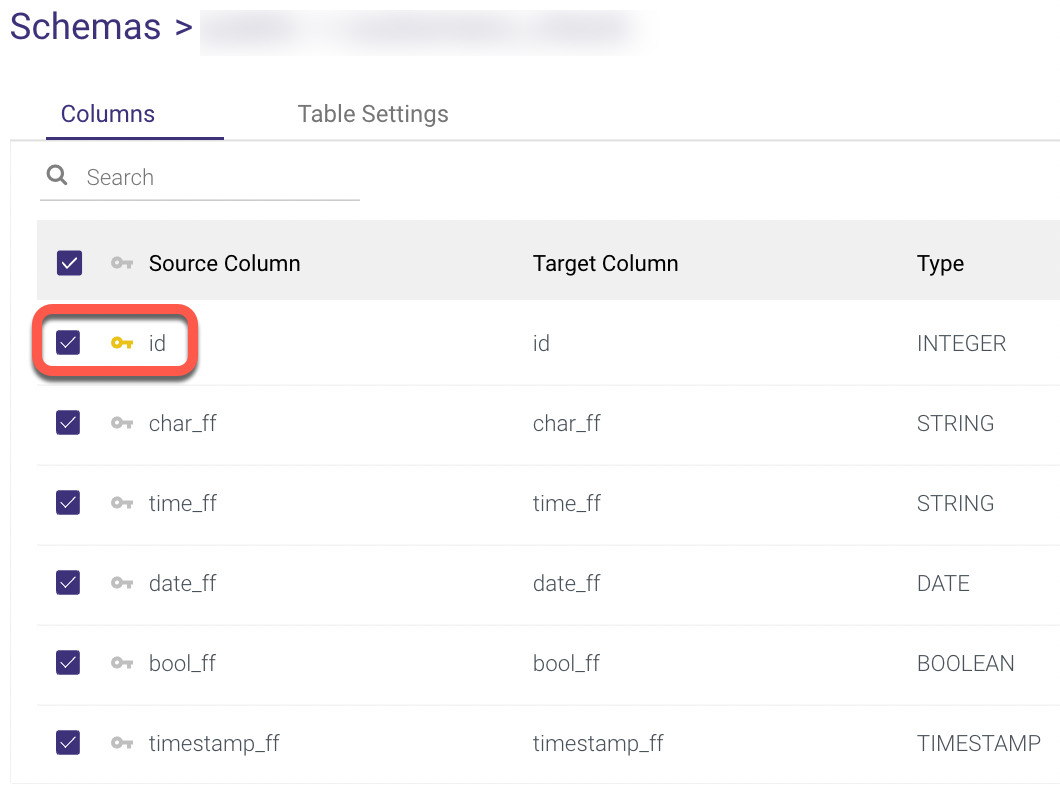

- To use CDC, the table must contain a key, which is defined as the Source Column - id.

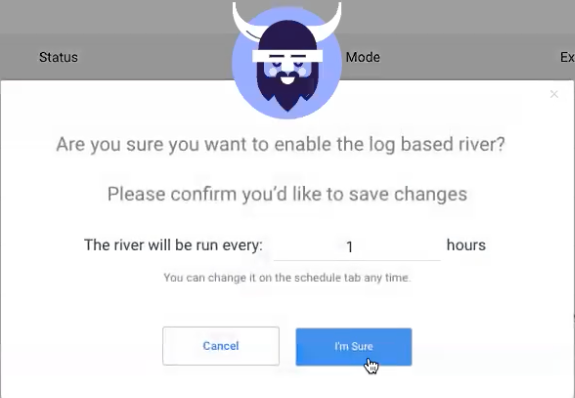

- Navigate to the 'Enable Log' toggle and select the number of hours you want to run the River, then click 'I'm Sure'.

- Wait for the Initiation Log to complete its processing.

- You're all set to run your log-based River now.

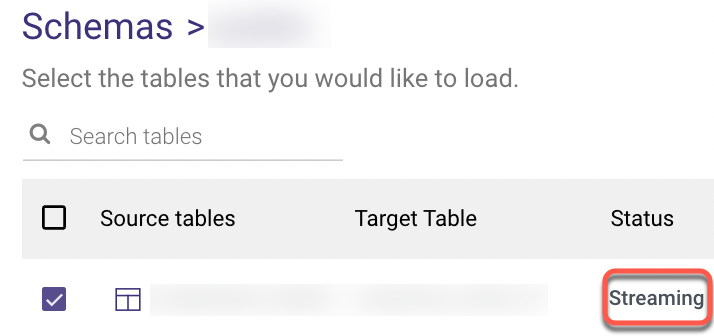

- Following the completion of the River Run, return to the Schema tab and check that the Table you selected is Streaming.

Type Mapping in CDC

While extracting your data, we match PostgreSQL data types to Rivery data types.

The mapping ofPostgreSQL data types to Rivery-compatible types is shown in the table below:

| PostgreSQL Type | Rivery Type |

| BOOLEAN | BOOLEAN |

| BYTEA | STRING |

| TOAST | -Not Supported- |

| CHAR | STRING |

| VARCHAR | STRING |

| TEXT | STRING |

| JSON | JSON |

| JSONB | JSON |

| DATE | STRING |

| FLOAT4 | STRING |

| FLOAT8 | STRING |

| INT2 | INTEGER |

| INT4 | INTEGER |

| INT8 | INTEGER |

| INTERVAL | STRING |

| NUMERIC | FLOAT |

| TIME | STRING |

| TIMESTAMP | STRING |

| TIMESTAMPTZ | STRING |

| UUID | STRING |

| XML | STRING |

Limitations

- The 'TOAST' file format is not supported.

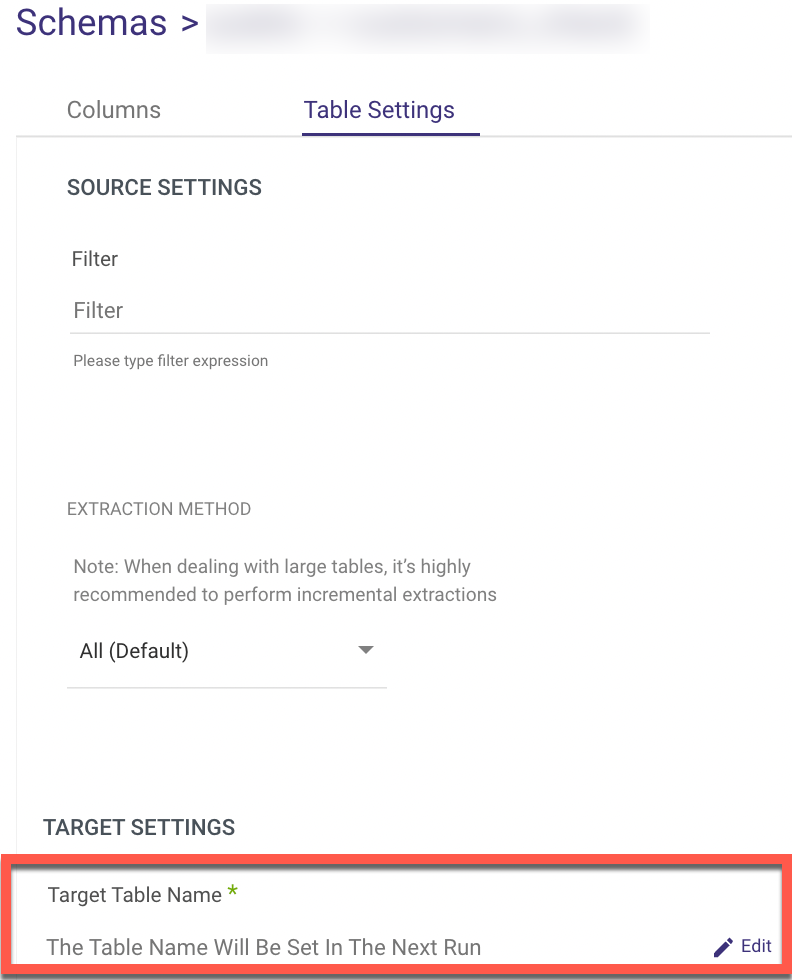

- Any special character will be replaced with an underscore. As a result, if you wish to edit the table name, go to:

1. The 'Schema' tab

2. Select a Table

3. Click 'Table Settings'

4. Choose 'Edit' to change the table name manually.

Current Stream Position in Your Database

To confirm the Stream position, run the following command on the server:

SELECT pg_current_wal_lsn() - '0/0' AS lsn_position;