NetSuite RESTlets Walkthrough

NetSuite RESTlets allow you to develop custom RESTful web services from your NetSuite account using SuiteScript.

Prerequisite

- A valid NetSuite RESTlets connection in Rivery. If you don't have a connection set up yet, follow the steps here.

Pulling data

- In the Source tab of a Source to Target river, select NetSuite RESTlets.

- Select the connection from the dropdown.

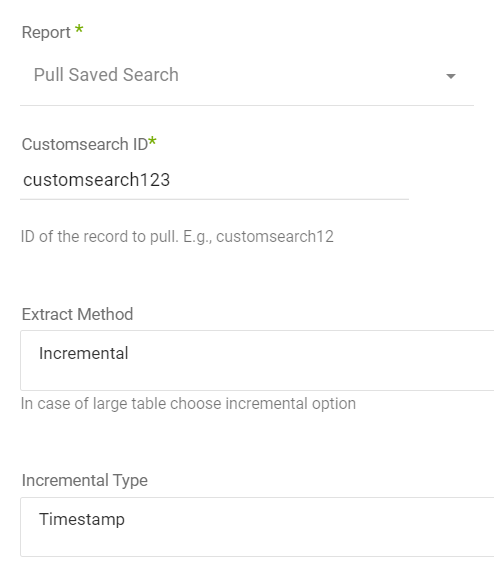

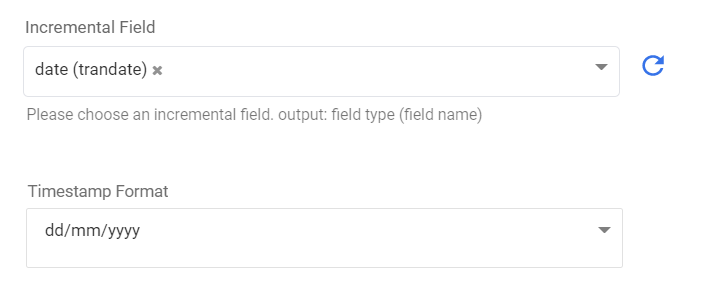

- Make sure the Timestamp format matches the date format used in the metadata. Note that the only available report is 'Pull Saved Search'.

- Select the extract method and filters.

- When using the Incremental extract method, make sure the Incremental Type matches the type of the incremental field.
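Before saving the river, it can help to check a sample date value against the format you plan to enter. The following is a minimal sketch in Python, not part of Rivery or NetSuite. The helper name `matches_format`, the sample values, and the use of Python `strptime` directives are assumptions for illustration; the format syntax that Rivery expects may differ.

```python
from datetime import datetime


def matches_format(sample: str, fmt: str) -> bool:
    """Return True if the sample date string parses with the given format."""
    try:
        datetime.strptime(sample, fmt)
        return True
    except ValueError:
        # strptime raises ValueError when the string and format disagree.
        return False


# Hypothetical sample values, not taken from a real saved search.
print(matches_format("2023-12-31 23:59:00", "%Y-%m-%d %H:%M:%S"))  # True
print(matches_format("31/12/2023", "%m/%d/%Y"))  # False: day and month are swapped
```

If the check fails for a value copied from the saved search results, the Timestamp format most likely needs adjusting.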

Limitation

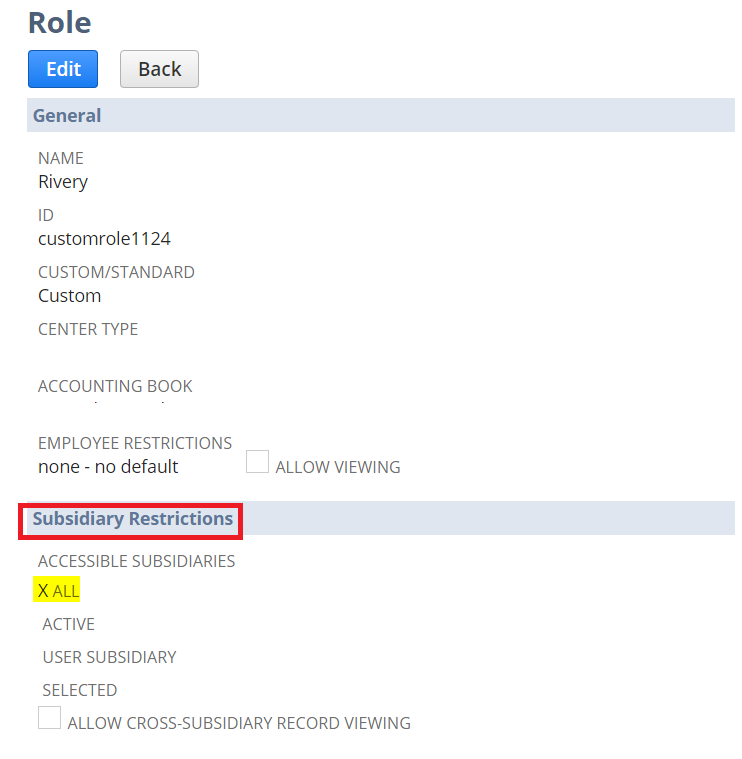

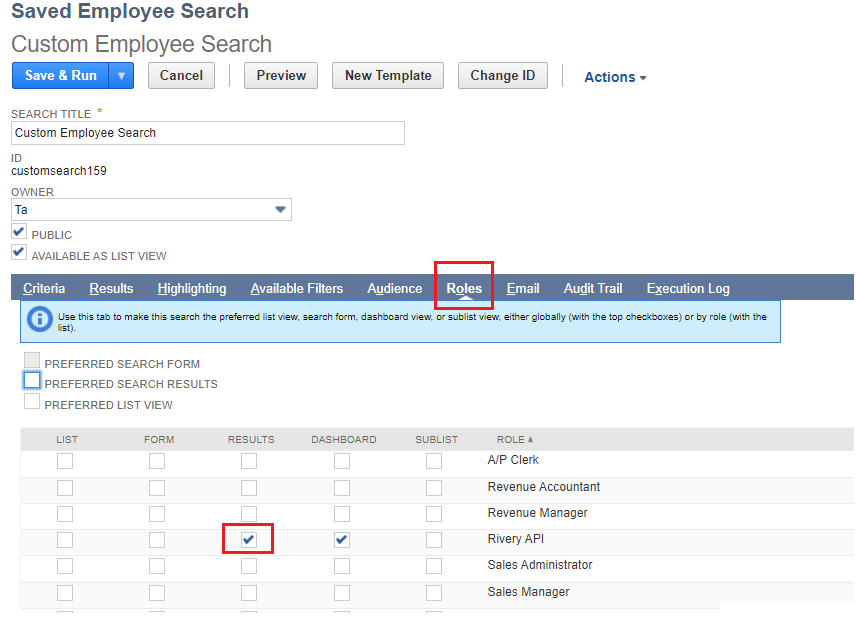

Each Customsearch report has a role and other permissions, which are found in its settings. Make sure the report has the proper role and permissions; otherwise, it returns less data or no data at all. The example shown illustrates the permissions needed to fetch all records: when the restriction was not set to all accessible subsidiaries, less data was returned.

Missing Roles

If you can't see the role (Rivery) that you created for web services read permission, follow these steps:

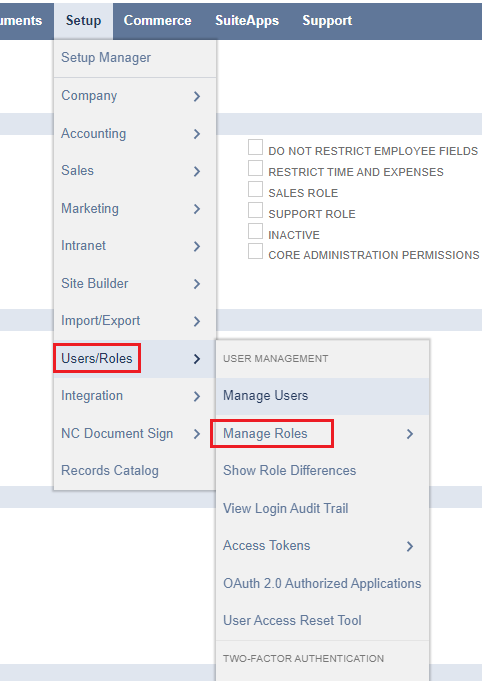

1. Go into Setup > Users/Roles > Manage Roles

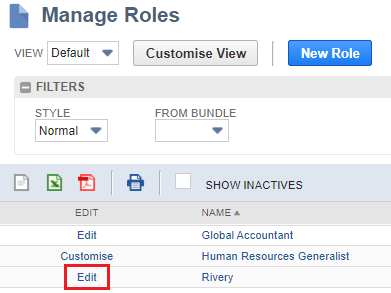

2. Go into Rivery's role (which you set to allow us to read data via third-party apps)

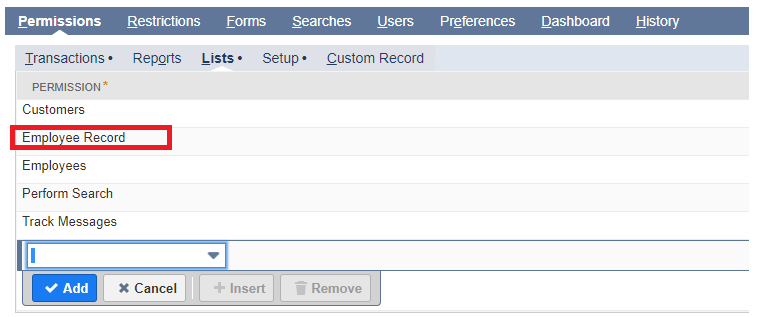

3. Add the role you need (e.g., Employee Records in the relevant tab) and click Save.

4. Refresh the custom search roles and make sure the results option is checked for Rivery's role.

Rate Limitation

The error "SSS_TIME_LIMIT_EXCEEDED" means that script execution time has exceeded the permitted duration: the script or operation is taking longer than allowed to finish.

Here's an example of the error message:

Error in netsuite_restlets response: 400-{'code': 'SSS_TIME_LIMIT_EXCEEDED', 'message': 'Script Execution Time Exceeded.'}
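If you scan logs for this error, the message can be split into its status, code, and description. The following is a minimal sketch in Python, assuming the message always follows the shape of the example above. The function name `parse_restlet_error` is hypothetical and not part of Rivery or NetSuite.

```python
import ast
import re


def parse_restlet_error(message: str):
    """Return (status, code, detail) from an error line, or None if it does not match."""
    # Expect "... response: <3-digit status>-{...dict literal...}".
    match = re.search(r"response:\s*(\d{3})-(\{.*\})", message)
    if match is None:
        return None
    status = int(match.group(1))
    # The payload uses single quotes, so parse it as a Python literal, not JSON.
    payload = ast.literal_eval(match.group(2))
    return status, payload.get("code"), payload.get("message")


line = (
    "Error in netsuite_restlets response: 400-"
    "{'code': 'SSS_TIME_LIMIT_EXCEEDED', 'message': 'Script Execution Time Exceeded.'}"
)
status, code, detail = parse_restlet_error(line)
if code == "SSS_TIME_LIMIT_EXCEEDED":
    print(f"Time limit hit (HTTP {status}): {detail}")
```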

To address this issue, consider implementing batch processing. If your script handles large data volumes, dividing the work into smaller batches can spread the workload and help prevent timeouts.
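The batching idea can be sketched as follows. This is an illustrative Python example, not the RESTlet script itself and not a Rivery feature: the helper `batch_ranges` and the batch size of 1000 are assumptions, and the real script would apply the same splitting logic in SuiteScript.

```python
from typing import Iterator, Tuple


def batch_ranges(total: int, batch_size: int) -> Iterator[Tuple[int, int]]:
    """Yield (start, end) index pairs that cover `total` rows in fixed-size batches."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, total, batch_size):
        # The last batch may be shorter than batch_size.
        yield start, min(start + batch_size, total)


# Hypothetical numbers: 2500 rows processed 1000 at a time.
for start, end in batch_ranges(2500, 1000):
    print(f"process rows {start} to {end - 1}")
```

Each batch then runs as a separate, shorter operation, so no single execution has to process the full result set.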